
In the world of data engineering, Maxime Beauchemin is someone who needs no introduction.

One of the first data engineers at Facebook and Airbnb, he wrote and open-sourced the wildly popular orchestrator Apache Airflow, followed shortly thereafter by Apache Superset, a data exploration tool that’s taking the data viz landscape by storm. Currently, Maxime is CEO and co-founder of Preset, a fast-growing startup that’s paving the way forward for AI-enabled data visualization for modern companies.

It’s fair to say that Maxime has experienced, and even architected, many of the most impactful data engineering technologies of the last decade. He also pioneered the data engineering role itself through his landmark 2017 blog post “The Rise of the Data Engineer,” in which he chronicles many of his observations.

In short, Maxime argues that to effectively scale data science and analytics, teams needed a specialized engineer to manage ETL, build pipelines, and scale data infrastructure.

Enter the data engineer. The data engineer is a member of the data team primarily focused on building and optimizing the platform for ingesting, storing, analyzing, visualizing, and activating large amounts of data.

A few months later, Maxime followed up that piece with a reflection on some of the data engineer’s biggest challenges: the job was hard, the respect was minimal, and the connection between their work and the actual insights generated was obvious but rarely recognized.

Data engineering was a thankless but increasingly important job, with data engineering teams straddling building infrastructure, running jobs, and fielding ad-hoc requests from the analytics and BI teams. As a result, being a data engineer was both a blessing and a curse.

In fact, in Maxime’s opinion, the data engineer had the “worst seat at the table.”

So, five years later, where does the field of data engineering stand? What is a data engineer today? What do data engineers do?

I sat down with Maxime to discuss the current state of affairs, including the decentralization of the modern data stack, the fragmentation of the data team, the rise of the cloud, and how all these factors have permanently changed the role of the data engineer.

The Speed of ETL and Analytics Has Increased

Maxime recalls a time not too long ago when data engineering meant running Hive jobs for hours at a time, frequently context switching between jobs, and managing different parts of your data pipeline.

To put it bluntly, data engineering was boring and exhausting at the same time.

“This never-ending context switching and the sheer length of time it took to run data operations led to burnout,” he says. “All too often, 5-10 minutes of work at 11:30 p.m. could save you 2-4 hours of work the next day, and that’s not necessarily a good thing.”

In 2021, data engineers can run big jobs very quickly thanks to the compute power of BigQuery, Snowflake, Firebolt, Databricks, and other cloud warehousing technologies. This move away from on-prem and open source solutions to the cloud and managed SaaS frees up data engineering resources to work on tasks unrelated to database management.

On the flip side, costs are more constrained.

“It used to be fairly cheap to run on-prem, but in the cloud, you have to be mindful of your compute costs,” Maxime says. “The resources are elastic, not finite.”

With data engineers no longer responsible for managing compute and storage, their role is shifting from infrastructure development to more performance-focused elements of the data stack, or even to specialized roles.

“We can see this shift in the rise of data reliability engineering, and in data engineering being responsible for managing (not building) data infrastructure and overseeing the performance of cloud-based systems.”

It’s Harder to Gain Consensus on Governance – and That’s OK

In a previous era of data engineering, data team structure was very much centralized, with data engineers and tech-savvy analysts serving as the “librarians” of the data for the entire company. Data governance was a siloed role, and data engineers became the de facto gatekeepers of data trust, whether or not they liked it.

Nowadays, Maxime says, it’s widely accepted that governance is distributed. Every team owns its own analytic domain, forcing decentralized team structures around broadly standardized definitions of what “good” data looks like.

“We’ve accepted that seeking consensus is not necessary in all areas, but that doesn’t make it any easier,” he says. “The data warehouse is the mirror of the organization in many ways. If people don’t agree on what they call things in the data warehouse or what the definition of a metric is, then this lack of consensus will be reflected downstream. But maybe that’s OK.”

Perhaps, Maxime argues, it isn’t necessarily the sole responsibility of the data team to find consensus for the business, especially if the data is being used across the company in different ways. This will inherently lead to duplication and misalignment unless teams are deliberate about what data is private (in other words, only used by a specific business domain) or shared with the broader business.

“Now, different teams own the data they use and produce, instead of having one central team in charge of all data for the company. When data is shared between groups and exposed at a broader scale, there needs to be more rigor around providing an API for change management,” he says.
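One way to make “an API for change management” concrete is to treat a shared table’s schema as a contract and check each proposed change against it before deploying. The sketch below is a minimal illustration under assumed conventions, not a tool Maxime describes: schemas are plain `dict`s mapping column names to type names, and `diff_schema` and `check_change` are hypothetical helpers. Private tables may change freely; shared tables may only gain columns without a coordinated migration.

```python
from dataclasses import dataclass

# Hypothetical schema representation: column name -> SQL type name.
Schema = dict[str, str]


@dataclass
class SchemaChange:
    kind: str  # "removed", "type_changed", or "added"
    column: str
    detail: str = ""


def diff_schema(old: Schema, new: Schema) -> list[SchemaChange]:
    """Compare two versions of a table schema column by column."""
    changes = []
    for col, old_type in old.items():
        if col not in new:
            changes.append(SchemaChange("removed", col))
        elif new[col] != old_type:
            changes.append(SchemaChange("type_changed", col, f"{old_type} -> {new[col]}"))
    for col in new:
        if col not in old:
            changes.append(SchemaChange("added", col))
    return changes


def check_change(old: Schema, new: Schema, shared: bool) -> list[SchemaChange]:
    """Return the changes that should block a deploy.

    Private tables (used only inside their own domain) may change freely.
    Shared tables may gain columns, but removals and type changes need a
    coordinated migration with downstream consumers.
    """
    if not shared:
        return []
    return [c for c in diff_schema(old, new) if c.kind != "added"]


old = {"user_id": "INT", "signup_date": "DATE"}
new = {"user_id": "INT", "signup_ts": "TIMESTAMP"}  # a rename is a removal plus an addition
print([(c.kind, c.column) for c in check_change(old, new, shared=True)])
# [('removed', 'signup_date')]
```

In this sketch a column rename surfaces as a blocking removal, which is one reason even a simple rename on a shared table can break every downstream consumer of the old name.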

Which brings us to our next point…

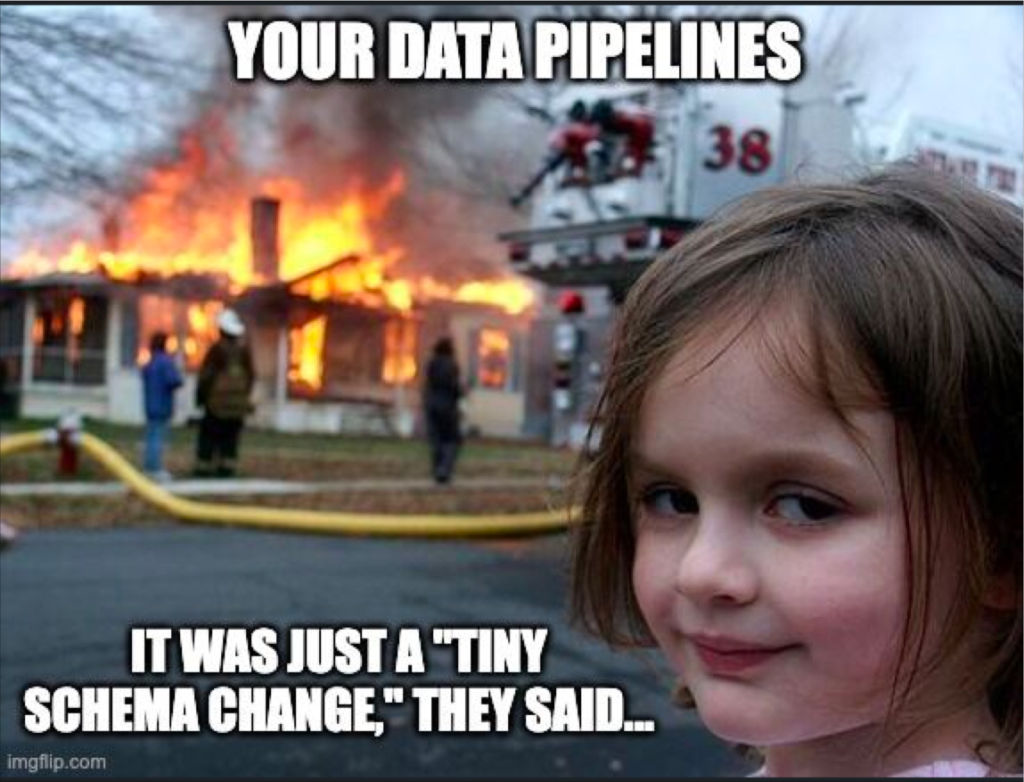

Change Management Is Still a Problem – but the Right Tools Can Help

In 2017, when Maxime wrote his first data engineering article, “when data would change, it would affect the whole company but no one would be notified.” This lack of change management was caused by both technical and cultural gaps.

When source code or data sets were changed or updated, breakages occurred downstream that would render dashboards, reports, and other data products effectively invalid until the issues were resolved. This data downtime (periods of time when data is missing, inaccurate, or otherwise erroneous) was costly, time-intensive, and painful to resolve.
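Each part of that definition of data downtime can be turned into an automated check: too few rows (missing), stale rows (out of date), and null-heavy columns (incomplete). The sketch below assumes rows arrive as Python dicts carrying a load timestamp; the function name `detect_downtime` and its thresholds (24 hours of staleness, 5% nulls) are illustrative assumptions, not recommended values.

```python
from datetime import datetime, timedelta, timezone


def detect_downtime(rows, ts_field, required_fields, now,
                    max_age=timedelta(hours=24), min_rows=1, max_null_rate=0.05):
    """Return a list of issues found in a batch of rows; empty means healthy.

    Checks three symptoms of data downtime: missing data (volume),
    stale data (freshness), and incomplete values (null rate).
    """
    issues = []
    if len(rows) < min_rows:
        issues.append(f"volume: got {len(rows)} rows, expected at least {min_rows}")
        return issues  # skip the remaining checks when the batch is too small to judge
    age = now - max(r[ts_field] for r in rows)
    if age > max_age:
        issues.append(f"freshness: newest row is {age} old")
    for field in required_fields:
        null_rate = sum(r.get(field) is None for r in rows) / len(rows)
        if null_rate > max_null_rate:
            issues.append(f"nulls: {field} is {null_rate:.0%} null")
    return issues


now = datetime(2021, 6, 1, 12, tzinfo=timezone.utc)
rows = [
    {"order_id": 1, "amount": 20.0, "loaded_at": now - timedelta(hours=30)},
    {"order_id": 2, "amount": None, "loaded_at": now - timedelta(hours=30)},
]
for issue in detect_downtime(rows, "loaded_at", ["order_id", "amount"], now):
    print(issue)
# freshness: newest row is 1 day, 6:00:00 old
# nulls: amount is 50% null
```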

All too often, downtime would strike silently, and data engineering teams would be left scratching their heads trying to figure out what went wrong, who was affected, and how they could fix it.

Nowadays, data engineering teams are increasingly relying on DevOps and software engineering best practices to build stronger tooling and cultures that prioritize communication and data reliability.

“Data observability and lineage have certainly helped data engineering teams identify and fix problems, and even surface information about what broke and who was impacted,” said Maxime. “Still, change management is just as cultural as it is technical. If decentralized teams are not following processes and workflows that keep downstream consumers or even the central data platform team in the loop, then it’s challenging to handle change effectively.”

If there’s no delineation between what data is private (used only by the data domain owners) or public (used by the broader company), then it’s hard to know who uses what data, and, if data breaks, what caused it. Lineage and root cause analysis can get you 50

For example, while Maxime was at Airbnb, Dataportal was built to democratize data access and empower all Airbnb employees to explore, understand, and trust data. However, while the tool told them who would be impacted by data changes through end-to-end lineage, it still didn’t make managing these changes any easier.
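Lineage tools answer the “who is impacted?” question by walking a dependency graph from a changed asset to everything downstream of it. The sketch below assumes lineage is already available as an adjacency dict and ownership as a lookup table; the asset and team names are hypothetical and say nothing about how Dataportal actually stored lineage. As the paragraph above notes, producing this list is the easy part; coordinating the change with those owners is not.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets that read from it directly.
LINEAGE = {
    "raw.bookings": ["core.fct_bookings"],
    "core.fct_bookings": ["finance.revenue_daily", "growth.host_dashboard"],
    "finance.revenue_daily": ["exec.kpi_dashboard"],
}
# Hypothetical ownership metadata.
OWNERS = {
    "raw.bookings": "data-platform",
    "core.fct_bookings": "data-platform",
    "finance.revenue_daily": "finance",
    "growth.host_dashboard": "growth",
    "exec.kpi_dashboard": "exec-analytics",
}


def downstream(asset, lineage):
    """Breadth-first walk returning every asset that transitively depends on `asset`."""
    seen, queue, impacted = {asset}, deque([asset]), []
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:  # guard against diamonds and cycles
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


def owners_to_notify(asset, lineage, owners):
    """Teams, other than the asset's own owner, whose assets a change would touch."""
    teams = {owners[a] for a in downstream(asset, lineage)}
    return sorted(teams - {owners[asset]})


print(downstream("core.fct_bookings", LINEAGE))
# ['finance.revenue_daily', 'growth.host_dashboard', 'exec.kpi_dashboard']
print(owners_to_notify("core.fct_bookings", LINEAGE, OWNERS))
# ['exec-analytics', 'finance', 'growth']
```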

Data Should Be Immutable – or Else Chaos Will Ensue

Data tools are leaning heavily on software engineering for inspiration – and by and large, that’s a good thing. But there are a few aspects of data that make working with ETL pipelines very different from working with a codebase. One example? Editing data like code.

“If I want to change a column name, it would be pretty hard to do, because you have to rerun your ETL and change your SQL,” said Maxime. “These new pipelines and data structures impact your system, and it can be hard to deploy a change, particularly when something breaks.”

For instance, if you have an incremental process that periodically loads data into a very large table, and you want to remove some of that data, you have to pause your pipeline, reconfigure the infrastructure, and then deploy new logic once the new columns have been dropped.

Data engineering tooling doesn’t really help you much, particularly in the context of differential loads. Backfills can still be really painful, but there are some benefits to holding onto them.

“There are actually good things that come out of maintaining this historical track record of your data,” he says. “The old logic lives alongside the new logic, and it can be compared. You don’t have to go and break and mutate a bunch of assets that have been published in the past.”
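Keeping old outputs instead of mutating them can be sketched as an append-only store in which each (table, partition, logic version) is written exactly once. This is a toy in-memory illustration under stated assumptions: `ImmutableStore`, `revenue_v1`, and `revenue_v2` are hypothetical names, and a real system would write versioned partitions to a warehouse or object storage rather than a `dict`.

```python
class ImmutableStore:
    """Append-only store: each (table, partition, version) is written once, never mutated."""

    def __init__(self):
        self._data = {}

    def write(self, table, partition, version, rows):
        key = (table, partition, version)
        if key in self._data:
            raise ValueError(f"{key} is already published; write a new version instead")
        self._data[key] = tuple(dict(r) for r in rows)  # copy so later edits can't leak in

    def read(self, table, partition, version):
        return [dict(r) for r in self._data[(table, partition, version)]]


# Two hypothetical versions of the same business logic.
def revenue_v1(rows):
    return [{"id": r["id"], "revenue": r["amount"]} for r in rows]


def revenue_v2(rows):  # new logic: net out refunds
    return [{"id": r["id"], "revenue": r["amount"] - r.get("refund", 0)} for r in rows]


source = [{"id": 1, "amount": 100}, {"id": 2, "amount": 50, "refund": 10}]
store = ImmutableStore()
store.write("revenue", "2021-06-01", "v1", revenue_v1(source))
store.write("revenue", "2021-06-01", "v2", revenue_v2(source))  # v1 output stays intact

old = store.read("revenue", "2021-06-01", "v1")
new = store.read("revenue", "2021-06-01", "v2")
# Compare row by row; both versions preserve the source row order.
diffs = [(o["id"], o["revenue"], n["revenue"]) for o, n in zip(old, new) if o != n]
print(diffs)  # [(2, 50, 40)]
```

The trade-off the article names is visible here: the store grows with every version (data debt), but no published output is ever rewritten in place, so old and new logic can be compared side by side.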

Keeping important data assets (even if they are no longer in use) can provide helpful context. Of course, the goal is that all of these changes should be documented explicitly over time.

So, pick your poison: data debt or data pipeline chaos.

The Role of the Data Engineer Is Splintering

Just as in software engineering, the roles and responsibilities of data engineering are changing, particularly in more mature organizations. The database engineer is becoming extinct as data warehousing needs move to the cloud, and data engineers are increasingly responsible for managing data performance and reliability.

According to Maxime, this is probably a good thing. In the past, the data engineer had “the worst seat at the table,” responsible for operationalizing someone else’s work with tooling and processes that didn’t quite live up to the needs of the business.

Now, all sorts of new data engineering-type roles are emerging that make this a little bit easier. Case in point: the analytics engineer. Coined by Michael Kaminsky, editor of Locally Optimistic, the term “analytics engineer” describes a role that straddles data engineering and data analytics and applies an analytical, business-oriented approach to working with data.

The analytics engineer is like the data whisperer, responsible for ensuring that data doesn’t live in isolation from business intelligence and analysis.

“The data engineer becomes almost like the keeper of good data habits. For instance, if an analytics engineer reprocesses the warehouse at every run with dbt, they can develop bad habits. The data engineer is the gatekeeper, responsible for educating data teams on best practices, most notably around performance (handling incremental loads), data modeling, and coding standards, and relying on data observability and DataOps to ensure that everyone is treating data with the same diligence.”
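Handling incremental loads usually means processing only what changed since the last run instead of rebuilding the whole table on every run. A common pattern is a high-water mark: remember the latest timestamp processed and pick up only newer rows next time. The sketch below is plain Python with hypothetical names (`incremental_load`, an in-memory `target` and `state`); dbt’s incremental models express a similar idea in SQL, but this is not dbt’s implementation.

```python
from datetime import datetime


def incremental_load(source_rows, target, state, ts_field="updated_at", key="id"):
    """Upsert only rows newer than the stored high-water mark, then advance it.

    `target` stands in for the destination table (keyed by primary key) and
    `state` persists the watermark between runs. Returns how many rows were processed.
    """
    watermark = state.get("watermark")
    new_rows = [r for r in source_rows if watermark is None or r[ts_field] > watermark]
    # Apply in timestamp order so the newest version of each key wins.
    for r in sorted(new_rows, key=lambda row: row[ts_field]):
        target[r[key]] = r
    if new_rows:
        state["watermark"] = max(r[ts_field] for r in new_rows)
    return len(new_rows)


source = [
    {"id": 1, "status": "booked", "updated_at": datetime(2021, 6, 1, 9)},
    {"id": 2, "status": "booked", "updated_at": datetime(2021, 6, 1, 10)},
]
target, state = {}, {}
first = incremental_load(source, target, state)  # first run loads everything: 2

source.append({"id": 1, "status": "cancelled", "updated_at": datetime(2021, 6, 1, 11)})
second = incremental_load(source, target, state)  # only the newer change: 1
print(first, second, target[1]["status"])  # 2 1 cancelled
```

The strict `>` comparison is a deliberate trade-off: rows that arrive late with a timestamp at or before the stored watermark are skipped, so production pipelines typically reprocess a small lookback window or rely on periodic backfills.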

Operational Creep Hasn’t Gone Away – It’s Just Been Distributed

Operational creep, as discussed in Maxime’s earlier article, refers to the gradual accumulation of responsibilities over time, and unfortunately, it’s an all-too-common reality for data engineers. While modern tools can help make data engineers more productive, they don’t always make their lives easier or less burdensome. In fact, they can often introduce more work or technical debt over time.

Still, even with the rise of more specialized roles and distributed data engineering teams, operational creep hasn’t gone away. Some of it has simply been transferred to other roles as technical savvy grows and more and more functions invest in data literacy.

For instance, Maxime argues, what the analytics engineer prioritizes isn’t necessarily what the data engineer prioritizes.

“Do analytics engineers care about the cost of running their pipelines? Do they care about optimizing your stack, or do they mostly care about delivering the next insight? I don’t know,” he says. “Operational creep is an industry problem because, chances are, the data engineer will still have to manage the ‘less sexy’ things like keeping tabs on storage costs or tackling data quality.”

In the world of the analytics engineer, operational creep exists, too.

“As an analytics engineer, if all I have to do is write a mountain of SQL to solve a problem, I’ll probably use dbt, but it’s still a mountain of templated SQL, which makes it hard to write anything reusable or manageable,” Maxime says. “But it’s still the option I would choose in many cases because it’s simple and straightforward.”

In an ideal world, he suggests, we’d want something that looks even more like modern code, because then we can create abstractions in a more scalable way.
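As a rough illustration of what such abstractions could look like, the sketch below builds a family of aggregate queries from one tested Python function instead of copying a templated SQL file per metric. It is not what Maxime proposes or how any particular tool works: the function name `daily_aggregate_sql`, its parameters, and the table names are hypothetical, the generated SQL is generic and may need adjusting for a given warehouse dialect, and identifiers are interpolated directly, so they must come from trusted configuration rather than user input.

```python
ALLOWED_AGGREGATES = {"SUM", "COUNT", "AVG", "MIN", "MAX"}


def daily_aggregate_sql(table, date_col, dims, measures, since):
    """Build a GROUP BY query; `measures` maps an output alias to (aggregate, column)."""
    select = [date_col, *dims]
    for alias, (agg, col) in measures.items():
        agg = agg.upper()
        if agg not in ALLOWED_AGGREGATES:
            raise ValueError(f"unsupported aggregate: {agg}")
        select.append(f"{agg}({col}) AS {alias}")
    return (
        f"SELECT {', '.join(select)}\n"
        f"FROM {table}\n"
        f"WHERE {date_col} >= '{since}'\n"
        f"GROUP BY {', '.join([date_col, *dims])}"
    )


# The same function serves different (hypothetical) tables and metrics.
bookings_sql = daily_aggregate_sql(
    "core.fct_bookings", "ds", ["market"],
    {"bookings": ("count", "*"), "revenue": ("sum", "amount")}, "2021-06-01",
)
signups_sql = daily_aggregate_sql(
    "core.fct_signups", "ds", [], {"signups": ("count", "user_id")}, "2021-06-01",
)
print(bookings_sql)
```

Because the query logic lives in one function, a change such as a new validation rule is made once and covered by ordinary unit tests; dbt macros can achieve similar reuse, with the logic written in Jinja templates instead.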

So, What’s Next for Data Engineering?

My conversation with Maxime left me with a lot to think about, but, by and large, I tend to agree with his points. While data team reporting structure and operational hierarchy are becoming more and more vertical, the scope of the data engineer is becoming increasingly horizontal and focused on performance and reliability, which is ultimately a good thing.

A data engineer makes $114,770 a year, according to Glassdoor.

Focus breeds innovation and speed, which prevents data engineers from trying to boil the ocean, spinning too many plates, or generally burning out. More roles on the data team mean that traditional data engineering tasks (fielding ad-hoc queries, modeling, transformations, and even building pipelines) don’t need to fall solely on their shoulders. Instead, they can focus on what matters: ensuring that data is trustworthy, accessible, and secure at every point in its lifecycle.

The changing data engineering tooling landscape reflects this move toward a more focused and specialized role.

DataOps makes it easy to schedule and run jobs; cloud data warehouses make it easy to store and process data in the cloud; data lakes allow for even more nuanced and complex processing use cases; and data observability, like application monitoring and observability before it, automates many of the rote and repetitive tasks related to data quality and reliability, providing a baseline level of health that allows the entire data organization to run smoothly.

With the rise of these new technologies and workflows, engineers also have a fantastic opportunity to own the movement toward treating data like a product. Building operational, scalable, observable, and resilient data systems is only possible if the data itself is treated with the diligence of an evolving, iterative product.

Here’s where use case-specific metadata, ML-driven data discovery, and tools that help us better understand what data actually matters (and what can go the way of the dodo) come in.

At least, that’s what we see in our crystal ball.

What do you see in yours?
